Newsletter #9: Fall Semester 2025 (HS25)

Welcome

Dear members of the Department of Psychology,

The 9th newsletter of the Open Science Initiative at the Department of Psychology promises to be an engaging read!

In this edition, we bring you news about two upcoming events at the Department of Psychology (a lunch talk and a day dedicated to "Replication Games"), and we also spotlight a valuable tool for ecological momentary assessment.

Please feel free to send any questions, suggestions, or contributions for this newsletter to openscience@psychologie.uzh.ch. The next newsletter will be released at the beginning of the Spring Semester 2026 (FS26).

Warm regards,

Your Open Science Initiative

Topics


Mark Rubin’s Upcoming Lunch Talk on Questioning Some Open Science Assumptions

We are delighted to announce that Mark Rubin will be delivering an online talk on October 20th, from 12:15 to 1:15 pm. You can join us in room BIN 3.D.27, or participate from anywhere in the world using this link: https://uzh.zoom.us/j/61495526609?pwd=kTV911lDszauKSnI566DXjbVQV625s.1.

Mark Rubin is a psychology professor at Durham University, UK, and has published numerous articles on issues related to the replication crisis in science. Notably, he has argued that questionable research practices, such as HARKing (i.e., hypothesizing after the results are known) and undisclosed multiple testing, are not always problematic (e.g., Rubin, 2020, 2022, 2023). He has also critiqued certain science reforms, such as preregistration and strict adherence to Neyman and Pearson’s approach to statistical hypothesis testing. Nonetheless, he generally supports other open science reforms in postpositivist science, such as open access articles, preprints, and open data and materials. For more on Mark’s work in this area, please visit: https://sites.google.com/site/markrubinsocialpsychresearch/replication-crisis.

In his talk, Mark will challenge several mainstream assumptions held by proponents of open science. These include: (1) exploratory research is more “tentative” than confirmatory research; (2) questionable research practices, such as HARKing, are bad; (3) researcher bias is bad; (4) undisclosed multiple testing is bad; and (5) publication bias is bad. He will discuss these and other assumptions, suggesting they should not be accepted uncritically.

We hope you are open to this alternative perspective on open science and will join us on October 20th!

- Rubin, M. (2020). Does preregistration improve the credibility of research findings? The Quantitative Methods for Psychology, 16, 376–390. https://doi.org/10.20982/tqmp.16.4.p376

- Rubin, M. (2022). The costs of HARKing. The British Journal for the Philosophy of Science, 73, 535–560. https://doi.org/10.1093/bjps/axz050

- Rubin, M. (2023). Questionable metascience practices. Journal of Trial & Error, 4. https://doi.org/10.36850/mr4

Open Science in Action: Ecological Momentary Assessment (EMA)

If you use Ecological Momentary Assessment (EMA), also known as the Experience Sampling Method (ESM), you're in luck!

Today, we highlight a tool and a framework. The tool, SEMA3, is open-source software for conducting intensive longitudinal survey research via smartphones. It is primarily used for EMA/ESM and is available at no cost to both researchers and participants. Developed and maintained by researchers at the University of Melbourne, it has been recognized with an award from the Society for the Improvement of Psychological Science for its contributions to psychology.

Fittingly, Revol and colleagues (2024) have developed a comprehensive framework for preprocessing ESM data, including a tutorial website, an R package, and reporting templates.

- Revol, J., Carlier, C., Lafit, G., Verhees, M., Sels, L., & Ceulemans, E. (2024). Preprocessing experience-sampling-method data: A step-by-step framework, tutorial website, R package, and reporting templates. Advances in Methods and Practices in Psychological Science, 7(4), 25152459241256609. https://doi.org/10.1177/25152459241256609

Replication Games in Zurich on January 19th, 2026

If you’re keen on gaining practical experience in assessing the reproducibility and robustness of research findings, mark your calendar for a day of Replication Games at the UZH Department of Psychology. All articles published in Nature Human Behaviour are eligible, except those previously used in a Replication Game event. Additionally, there will be a list of other topics and journals to choose from for the event.

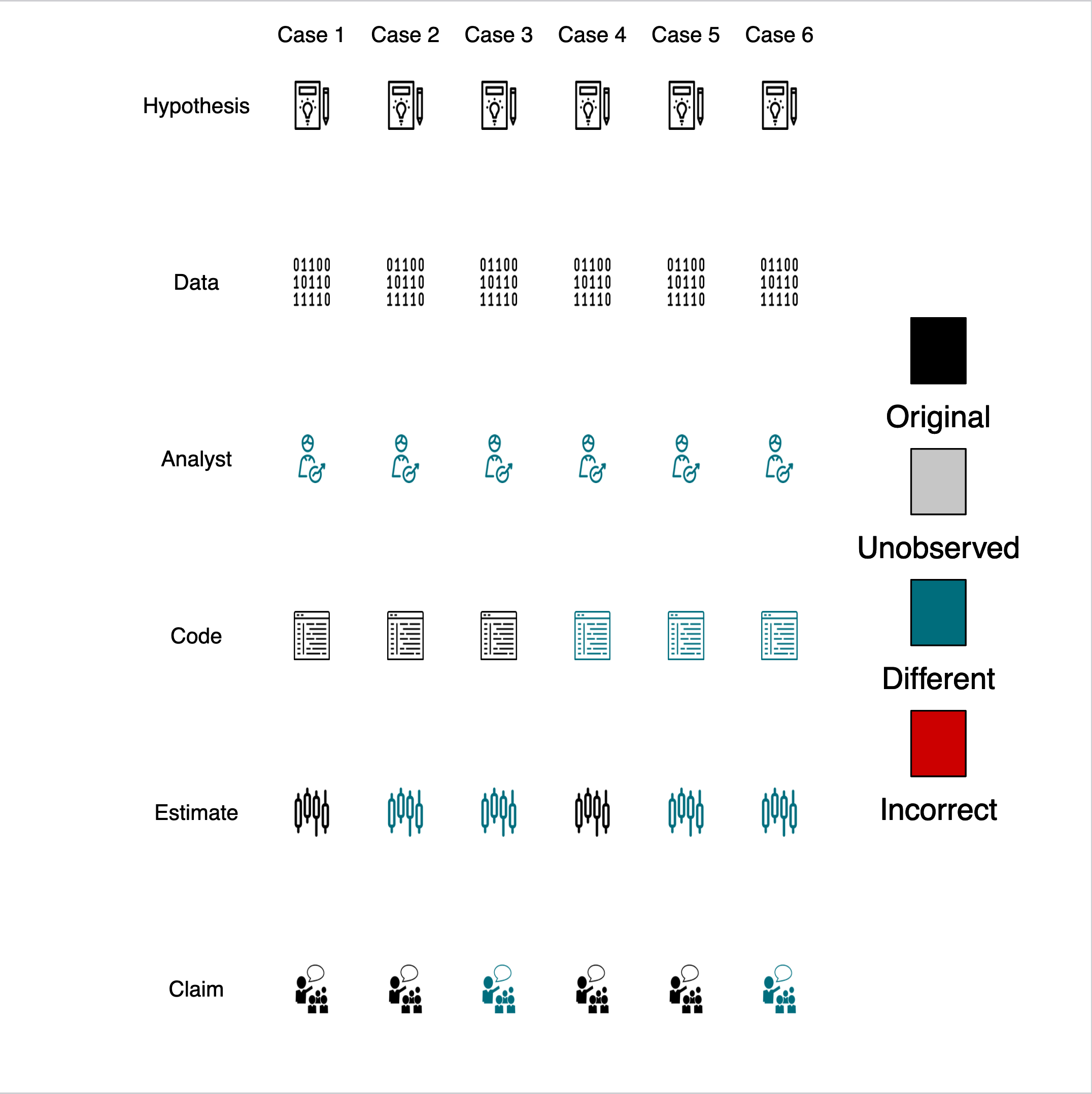

The figure above (created with scifigure; see Patil et al., 2019) illustrates what might happen when a different analyst (i.e., you!) uses the data published alongside the original article to reproduce the results. Cases 1 to 3 involve running the original analysis code to verify computational reproducibility, while Cases 4 to 6 apply alternative plausible analytic methods (i.e., modified code) to test the robustness of the results.

Exploring the reproducibility and robustness of research findings is an active area of study (see Heyard et al., 2025). The Replication Games offer valuable experience for everyone, from graduate students to professors, as well as an opportunity to network and sharpen your data-handling skills. Participation contributes to the scientific record and also lets you see how other researchers try to make their work reproducible, and how well they succeed.

Registration will open in a few weeks. In the meantime, consider forming a small team of 3 to 5 members. A mix of career stages is recommended (i.e., members from at least two of the following: MSc student, PhD student, postdoc, professor). You can list your team members' names when signing up, but you can also sign up individually.

We look forward to seeing you on January 19th, 2026, for a day of replication, fun, and refreshments! If you have any questions, please contact Johannes Ullrich or Joanna Rutkowska.

- Patil, P., Peng, R. D., & Leek, J. T. (2019). A visual tool for defining reproducibility and replicability. Nature Human Behaviour, 3, 650–652. https://doi.org/10.1038/s41562-019-0629-z

- Heyard, R., et al. (2025). A scoping review on metrics to quantify reproducibility: A multitude of questions leads to a multitude of metrics. Royal Society Open Science, 12, 242076. https://doi.org/10.1098/rsos.242076

More Events

- We would like to draw your attention to the UZH Research Synthesis Half-Day, organized by the Center for Reproducible Science (CRS), taking place on Friday, December 12th, 2025, from 13:00 to 17:00 at the UZH City Campus. This event aims to bring together research synthesizers from various faculties and disciplines at UZH to exchange experiences and methods, identify gaps and future needs, and lay the foundation for enhanced collaboration and training opportunities at the CRS and UZH. The deadline for contributions is October 1st, 2025, and the registration deadline is November 1st, 2025.

- There are some very interesting talks coming up as part of ReproducibiliTea, the journal club jointly organized by the Universities of Basel and Zurich. Check out their detailed program: https://www.crs.uzh.ch/en/training/ReproducibiliTea.html

Final words

This newsletter is published once each semester. If you have any questions about it, feel free to contact us at openscience@psychologie.uzh.ch.

Because the newsletter appears only once per semester, we cannot inform you about events scheduled on short notice. We therefore recommend subscribing to the mailing list of UZH’s Center for Reproducible Science to stay updated on further training opportunities and scientific exchanges related to open science at UZH, such as the ReproducibiliTea Journal Club.

Current members of the Open Science Initiative

Prof. Dr. Johannes Ullrich (Head); Dr. Walter Bierbauer; Prof. Dr. Renato Frey; Dr. Martin Götz; M.Sc. Patrick Höhener; Dr. Sebastian Horn; Dr. Lisa Kaufmann; M.Sc. Sophie Kittelberger; Dr. André Kretzschmar; M.Sc. Pascal Küng; Prof. Dr. Nicolas Langer; Dr. Susan Mérillat; Dr. Joanna Rutkowska; Dr. Robin Segerer; Prof. Dr. Carolin Strobl; Dr. Lisa Wagner; M.Sc. Jasmin Weber; Dr. Katharina Weitkamp; M.Sc. Natascha Wettstein